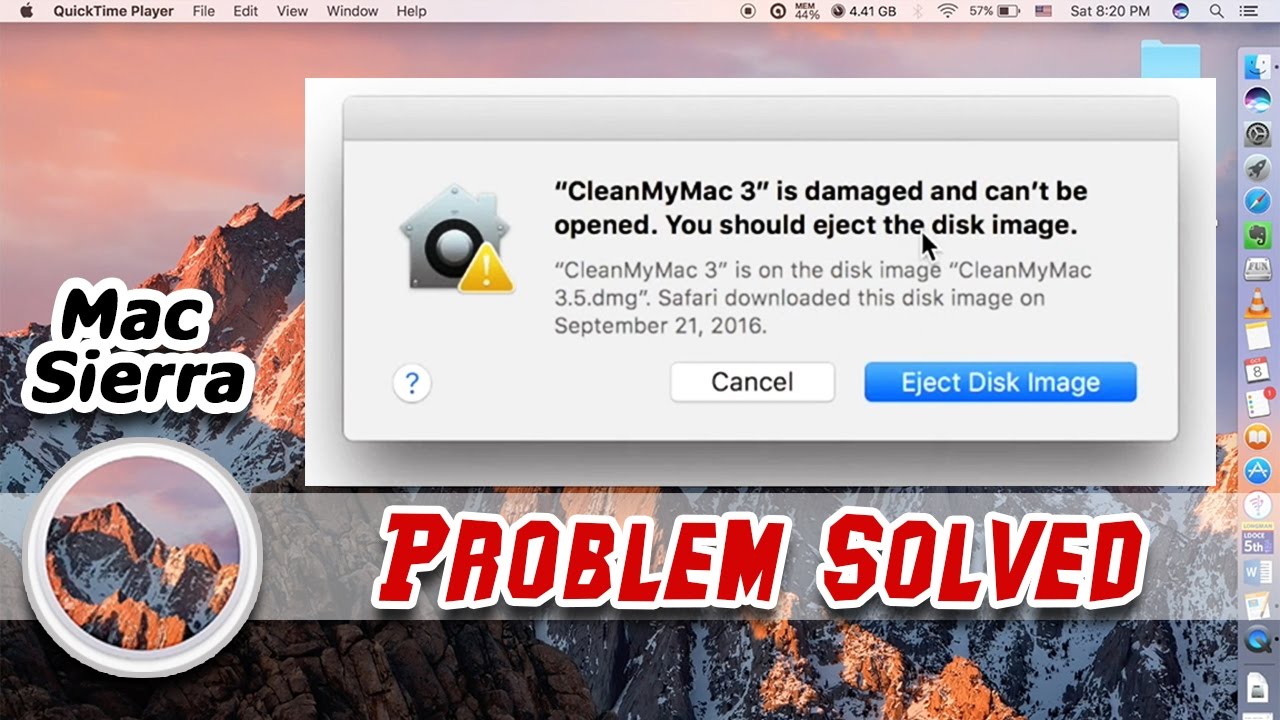

XnView MP is a versatile and powerful photo viewer, image manager, and image resizer. It is one of the most stable, easy-to-use, and comprehensive photo editors, and it supports all common picture and graphics formats (JPEG, TIFF, PNG, GIF, WEBP, PSD, JPEG2000, OpenEXR, camera RAW, HEIC, PDF, DNG, CR2).

.dmg, also known as "Mac OS X Disk Image", is a mountable disk image format created in Mac OS X. It contains raw block data that is typically compressed and sometimes encrypted, and opening it mounts the image as a virtual disk on the desktop.

When you are done with a disk image:

- Close any Finder windows that have been left open.

- Eject the disk image (not the .DMG file): click its desktop icon, then press CMD+E.

- Delete the .DMG file by dragging it to the Trash.

Several Mac users have experienced issues with DMG files; some Macs cannot recognize DMG files created as backups. Here's how to fix the issue.

Have you seen this? When you try to mount a .dmg (disk image) file on Mac OS, you get the odd error 'no mountable file systems'. Here's what to do:

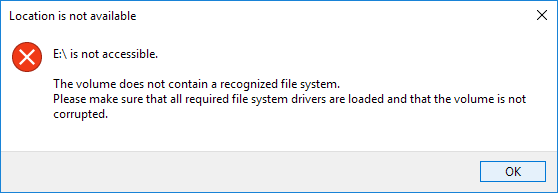

Macs are among the best systems in the world, but you can still run into problems with them, including the Mac error "no mountable file systems". This error can make a Mac run slowly or cause unexpected problems, and in many cases these problems lead to a crash. When the error occurs, you may not be able to access your hard drive or the applications installed on your system.

If you are facing the Mac error "no mountable file systems", you don't need to panic. Whether your Mac is running slowly, is not working properly, has an inaccessible hard disk, or has lost data because of this error, this guide gives you a fix along with complete instructions for recovering Mac data.

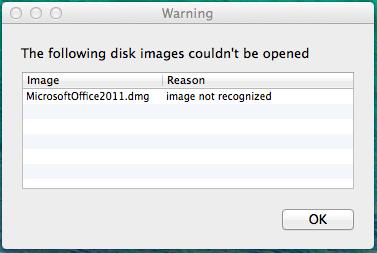

Office for Mac 2011: the download says the disk image could not be opened because the file is not recognized. "I just purchased Office for Mac 2011, and when I download it, a message comes up saying the disk image could not be opened because the file is not recognized."

OS X El Capitan suddenly can't open DMG files: one user managed to open .dmg files by going to Disk Utility > File > Open Disk Image. Once the image is open in Disk Utility, right-click its icon and choose Show in Finder. Seeing as you can still basically use the Mac, I would recommend reinstalling.

Feb 18, 2013: "Hi, I just bought Mountain Lion from the Mac App Store and need to make a backup USB. But when I try to open InstallESD.dmg, it says 'The following disk images couldn't be opened: InstallESD.dmg, not recognized.' Could anyone help me? Any help would be appreciated!"

Image Not Recognized Dmg Files Mac Os

The Mac error "no mountable file systems" can occur for these reasons, causing Mac data loss!

This error can have various causes. Here are a few of them:

- Accidental deletion of Mac files

- Power failure

- Interrupted read/write operations

- Unsupported data

- Virus attack

- BIOS setting modifications

- Header file corruption

- Corruption of catalog file nodes

- Boot sector problems

- Problems with program installations, etc.

Fixing the "no mountable file systems" error manually:

If you are facing this error, follow these steps:

- In most cases, the downloaded DMG file is actually corrupt or was damaged during download. If possible, download the DMG again with any download-assistant plug-ins turned off. You can also try a different browser. Or, if you don't need to be logged in to the site to download the file and you want to be fancy, you can run `curl -O url` in Terminal to download it.

- Reboot your Mac if you haven't already tried that. Apparently, opening too many DMG files sometimes causes an issue that a reboot fixes.

- Try mounting the DMG from the command line in Terminal. Even if it still fails, you will at least get a useful error message to go on:

  - Open Terminal: in Spotlight (the magnifying glass in the upper-right corner of your screen), search for Terminal and press Enter to open the Terminal app.

  - Type `hdiutil attach ` (including the trailing space) into Terminal. Don't press Enter yet.

  - Drag the DMG file from your Finder window onto the Terminal window and let go. This fills in the location of the DMG file.

  - Press Enter.

- Check whether any security policies on this machine prevent writing to external drives (thumb drives, optical drives, etc.).
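The first and third steps above can be scripted. The sketch below is a minimal example, assuming Python 3.9+ on macOS: it computes a SHA-256 digest so you can compare a re-downloaded DMG against a checksum published by the vendor (whether one is published depends on the site), and it builds the same `hdiutil attach` command you would type by hand, running it only when `hdiutil` is present. The function names are hypothetical helpers, not part of any tool.

```python
import hashlib
import shutil
import subprocess
import sys


def sha256_of(path: str, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def attach_command(dmg_path: str) -> list[str]:
    """Build the command equivalent to typing 'hdiutil attach <file>' in Terminal."""
    return ["hdiutil", "attach", dmg_path]


def try_attach(dmg_path: str) -> str:
    """Run hdiutil attach if available and return its combined output,
    so the actual error message is visible when mounting fails."""
    if shutil.which("hdiutil") is None:
        return "hdiutil not found (it ships with macOS only)"
    result = subprocess.run(attach_command(dmg_path), capture_output=True, text=True)
    return result.stdout + result.stderr


if __name__ == "__main__" and len(sys.argv) == 2:
    print("SHA-256:", sha256_of(sys.argv[1]))
    print(try_attach(sys.argv[1]))
```

Run it as `python3 check_dmg.py ~/Downloads/file.dmg` (the script name is arbitrary). A digest that differs from the published one means the download itself is damaged, so re-downloading is the fix rather than anything on the Mac.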

NOTE: Fixing the "no mountable file systems" error manually is never easy, and you need to be technically skilled to recover Mac files this way. Even a slight mistake can cause serious problems for your Mac, so newcomers are advised to use a Mac data recovery tool instead.

Mac Data Recovery Software: Fix the "no mountable file systems" error using software

If you are suffering from Mac data loss, Remo Mac data recovery software can solve your problem. It is a simple, reliable, easy-to-use tool that recovers lost Mac data quickly. It can recover corrupted Mac data from both external and internal drives: it scans the whole drive and recovers lost files and partitions. You can use it to recover deleted or lost Mac files.

You can install the Mac data recovery software on your system and use it without any technical knowledge; it is designed so that even a novice can get their Mac data back. The tool can also recover data from damaged partitions of various file systems. For instance, to recover files from a damaged HFS+ partition, you can use Remo Mac data recovery and get the job done in a few simple steps.

Follow the steps below to recover data after encountering the "no mountable file systems" error.

Recover Data After Encountering No Mountable File System Error:

Download, install and launch Remo Recover Mac on the affected Mac system.

Step 1: Select Recover Volumes/Drives from the main screen and click Volume Recovery on the following screen.

Step 2: Check whether the drive showing the error is listed. If it is not, select either of the two options at the bottom of the software window and click Next.

Step 3: Select Advance Scan, because Standard Scan cannot recognize the data on a drive showing the "no mountable file systems" error.

Step 4: Skip this option if you want to recover all of the data on the inaccessible drive, or select only the important files and click Next.

Step 5: Once the software has completed scanning the inaccessible drive, click on a file and select Preview to verify the file before saving the recovered files.

Note: Do not save the recovered files to the drive they were recovered from; save them to a different location.
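If you copy recovered files with your own scripts rather than through the recovery tool, you can check the destination before writing. The sketch below is a rough, hypothetical check that assumes two paths on the same mounted volume report the same `st_dev` from `os.stat`. Separate mounted volumes can share one physical disk (for example, partitions, or APFS volumes in one container), so a "different volume" result does not guarantee a different drive.

```python
import os


def same_volume(path_a: str, path_b: str) -> bool:
    """True if both paths report the same device ID (the same mounted volume)."""
    return os.stat(path_a).st_dev == os.stat(path_b).st_dev


def check_destination(source_volume: str, destination: str) -> None:
    """Refuse to save recovered files onto the volume they were recovered from."""
    if same_volume(source_volume, destination):
        raise ValueError(f"{destination} is on the same volume as {source_volume}")
```

For example, `check_destination("/Volumes/Damaged", "/Volumes/Backup")` raises `ValueError` if both paths turn out to be on one volume (the volume names here are placeholders).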

Open DMG File

The DMG extension is used for disk image files on Macintosh computers running Mac OS X. It replaced the older IMG extension, which was discontinued in later Mac operating systems. DMG files can be opened on Mac, Linux, and Windows, although Windows requires additional software. Note that Windows and Linux tools cannot open every DMG file, because they support only some variants of the format.

Apple uses DMG files to distribute software over the internet. These files offer features such as compression and password protection that are uncommon in other software distribution formats. DMG files are native to Mac OS X and are structured according to the Universal Disk Image Format (UDIF) or the older New Disk Image Format (NDIF). They can be accessed in the Mac OS Finder either by launching the DMG file or by mounting it as a drive.
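A quick way to tell whether a downloaded file is even a UDIF image is to look at its trailer. The sketch below assumes the layout described by third-party reverse engineering of UDIF (Apple has not published an official specification): a 512-byte big-endian block at the very end of the file that starts with the magic bytes `koly`. It reads only the leading fields. A missing signature suggests a truncated or corrupt download (or a non-UDIF image such as NDIF), while a valid signature does not prove the rest of the image is intact. The function and field names are illustrative.

```python
import os
import struct
from typing import Optional

KOLY_SIZE = 512
# Assumed big-endian prefix of the trailer: signature, version, header size, flags,
# running data fork offset, data fork offset, data fork length,
# resource fork offset, resource fork length, segment number, segment count,
# segment ID (16 bytes), data checksum type, data checksum size,
# data checksum (128 bytes), XML plist offset, XML plist length.
KOLY_PREFIX = struct.Struct(">4sIIIQQQQQII16sII128sQQ")


def read_udif_trailer(path: str) -> Optional[dict]:
    """Return selected trailer fields, or None if no 'koly' trailer is found."""
    if os.path.getsize(path) < KOLY_SIZE:
        return None
    with open(path, "rb") as f:
        f.seek(-KOLY_SIZE, os.SEEK_END)
        block = f.read(KOLY_SIZE)
    fields = KOLY_PREFIX.unpack_from(block)
    if fields[0] != b"koly":
        return None
    return {
        "version": fields[1],
        "header_size": fields[2],
        "data_fork_offset": fields[5],
        "data_fork_length": fields[6],
        "xml_offset": fields[15],
        "xml_length": fields[16],
    }
```

If `read_udif_trailer("file.dmg")` returns `None` for a file that should be a modern DMG, re-downloading it is the first thing to try.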

DMG is sometimes described as Apple's equivalent of MSI files on Windows PCs. Non-Macintosh systems can access DMG files and extract them or convert them to ISO image files for burning, and several Windows applications are designed to do this.

7-Zip and DMG Extractor are the best options for opening DMG files on Windows because they are compatible with the most DMG variants. On Linux, the 'cdrecord' command can be used to burn DMG files to CDs or DVDs.

Aside from the Finder, you can open DMG files on the Mac with Apple Disk Utility, Roxio Toast, and Dare to be Creative iArchiver. On Windows, additional applications such as Acute Systems TransMac, DMG2IMG, and DMG2ISO can be installed to fully support the files.
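The per-platform options above can be summarized as a small lookup. This is a sketch under stated assumptions: it uses `hdiutil convert ... -format UDTO` on macOS (which writes a CD/DVD master with a `.cdr` extension that burning tools generally treat like an ISO), the `dmg2img` command-line tool on Linux, and 7-Zip's `7z x` extraction on Windows. Each tool must already be installed (only `hdiutil` ships with the OS), and the helper name is hypothetical.

```python
import sys


def dmg_convert_command(dmg: str, out: str, platform: str = sys.platform) -> list[str]:
    """Return a command that converts or extracts a DMG on the given platform."""
    if platform == "darwin":
        # UDTO = CD/DVD master; hdiutil appends ".cdr" to the output name.
        return ["hdiutil", "convert", dmg, "-format", "UDTO", "-o", out]
    if platform.startswith("linux"):
        # dmg2img decompresses the image into a raw .img file.
        return ["dmg2img", dmg, out]
    if platform.startswith("win"):
        # 7-Zip extracts the contents into the folder given after -o (no space).
        return ["7z", "x", dmg, f"-o{out}"]
    raise ValueError(f"unsupported platform: {platform}")
```

Pass the result to `subprocess.run(cmd, check=True)` to execute it. Note that the Windows branch extracts files rather than producing an image, matching how 7-Zip is typically used on DMGs.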

DMG files are transferred by email or over the internet using the MIME (Multipurpose Internet Mail Extensions) type application/x-apple-diskimage.
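If you serve DMG files from your own scripts, you can register this MIME type so that the correct `Content-Type` is sent. A minimal sketch using Python's standard `mimetypes` module, assuming the platform's default MIME table may not already know the `.dmg` extension:

```python
import mimetypes

# Register (or override) the mapping so lookups do not depend on the OS's MIME table.
mimetypes.add_type("application/x-apple-diskimage", ".dmg")

content_type, encoding = mimetypes.guess_type("Fawkes.dmg")
print(content_type)  # application/x-apple-diskimage
```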

The following file types are similar to DMG and also contain disk images:

- ISO File - ISO disk image file

- IMG File - IMG disk image file

- VHD/VHDX File - Virtual Hard Disk image file

- 4-23: v1.0 release for Windows/MacOS apps and Win/Mac/Linux binaries!

- 4-22: Fawkes hits 500,000 downloads!

- 1-28: Adversarial training against Fawkes detected in Microsoft Azure (see below)

- 1-12: Fawkes hits 335,000 downloads!

- 8-23: Email us to join the Fawkes-announce mailing list for updates/news on Fawkes

- 8-13: Fawkes paper presented at USENIX Security 2020

News: Jan 28, 2021. It has recently come to our attention that there was a significant change made to the Microsoft Azure facial recognition platform in their backend model. Along with general improvements, our experiments seem to indicate that Azure has been trained to lower the efficacy of the specific version of Fawkes that has been released in the wild. We are unclear as to why this was done (since Microsoft, to the best of our knowledge, does not build unauthorized models from public facial images), nor have we received any communication from Microsoft on this. However, we feel it is important for our users to know of this development. We have made a major update (v1.0) to the tool to circumvent this change (and others like it). Please download the newest version of Fawkes below.

2020 is a watershed year for machine learning. It has seen the true arrival of commoditized machine learning, where deep learning models and algorithms are readily available to Internet users. GPUs are cheaper and more readily available than ever, and new training methods like transfer learning have made it possible to train powerful deep learning models using smaller datasets.

But accessible machine learning also has its downsides. A recent New York Times article by Kashmir Hill profiled clearview.ai, an unregulated facial recognition service that has downloaded over 3 billion photos of people from the Internet and social media and used them to build facial recognition models for millions of citizens without their knowledge or permission. Clearview.ai demonstrates just how easy it is to build invasive tools for monitoring and tracking using deep learning.

So how do we protect ourselves against unauthorized third parties building facial recognition models that recognize us wherever we may go? Regulations can and will help restrict the use of machine learning by public companies but will have negligible impact on private organizations, individuals, or even other nation states with similar goals.

The SAND Lab at the University of Chicago has developed Fawkes¹, an algorithm and software tool (running locally on your computer) that gives individuals the ability to limit how unknown third parties can track them by building facial recognition models out of their publicly available photos. At a high level, Fawkes 'poisons' models that try to learn what you look like by putting hidden changes into your photos and using them as Trojan horses to deliver that poison to any facial recognition models of you. Fawkes takes your personal images and makes tiny, pixel-level changes that are invisible to the human eye, in a process we call image cloaking. You can then use these 'cloaked' photos as you normally would: sharing them on social media, sending them to friends, printing them, or displaying them on digital devices, the same way you would any other photo. The difference, however, is that if and when someone tries to use these photos to build a facial recognition model, the 'cloaked' images will teach the model a highly distorted version of what makes you look like you. The cloak effect is not easily detectable by humans or machines and will not cause errors in model training. However, when someone tries to identify you by presenting an unaltered, 'uncloaked' image of you (e.g. a photo taken in public) to the model, the model will fail to recognize you.
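To make the idea of a cloak more concrete, the sketch below shows the general shape of such an optimization in a toy setting: it nudges an image, within a small per-pixel budget, so that its feature vector moves toward the features of a different (target) identity. This is not Fawkes' implementation. It assumes a random linear map `W` in place of a real face feature extractor and an L-infinity pixel budget in place of the perceptual (DSSIM) budget described in the technical paper; `toy_cloak` and its parameters are hypothetical.

```python
import numpy as np


def toy_cloak(x, target_feat, W, budget=0.03, steps=200, lr=0.005):
    """Nudge flattened image x (pixels in [0, 1]) so that W @ x moves toward target_feat.

    W           -- random linear map standing in for a real feature extractor
    target_feat -- feature vector of a hypothetical target identity
    budget      -- maximum per-pixel change (L-infinity bound)
    """
    def loss(d):
        r = W @ (x + d) - target_feat
        return 0.5 * float(r @ r)

    delta = np.zeros_like(x)
    best_delta, best_loss = delta.copy(), loss(delta)
    for _ in range(steps):
        grad = W.T @ (W @ (x + delta) - target_feat)  # gradient of loss w.r.t. delta
        delta = delta - lr * np.sign(grad)             # signed-gradient step
        delta = np.clip(delta, -budget, budget)        # stay within the budget
        delta = np.clip(x + delta, 0.0, 1.0) - x       # keep pixels valid
        current = loss(delta)
        if current < best_loss:                        # keep the best perturbation seen
            best_delta, best_loss = delta.copy(), current
    return np.clip(x + best_delta, 0.0, 1.0)
```

The cloaked output differs from the input by at most `budget` per pixel, yet its features end up closer to the target identity than the original's were. Per the paper, the real tool applies this kind of objective against actual face feature extractors.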

Fawkes has been tested extensively and proven effective in a variety of environments, and in our tests it was 100% effective against state-of-the-art facial recognition models (Microsoft Azure Face API, Amazon Rekognition, and Face++). We are in the process of adding more material here to explain how and why Fawkes works. For now, please see the link below to our technical paper, which was presented at the USENIX Security Symposium, held on August 12-14, 2020.

The Fawkes project is led by two PhD students at SAND Lab, Emily Wenger and Shawn Shan, with important contributions from Jiayun Zhang (SAND Lab visitor and current PhD student at UC San Diego) and Huiying Li, also a SAND Lab PhD student. The faculty advisors are SAND Lab co-directors and Neubauer Professors Ben Zhao and Heather Zheng.

¹ The Guy Fawkes mask, à la V for Vendetta.

In addition to the cloaked photos of the team above, here are a couple more examples of cloaked images and their originals. Can you tell which is the original? (Cloaked image of the Queen courtesy of The Verge.)

Publication & Presentation

Fawkes: Protecting Personal Privacy against Unauthorized Deep Learning Models.

Shawn Shan, Emily Wenger, Jiayun Zhang, Huiying Li, Haitao Zheng, and Ben Y. Zhao.

In Proceedings of USENIX Security Symposium 2020. (Download PDF here)

Downloads and Source Code - v1.0 Release!

- NEW! Fawkes v1.0 is a major update. We made the following updates to significantly improve the protection and software reliability.

- We updated the backend feature extractor to state-of-the-art ArcFace models.

- We injected additional randomness into the cloak generation process through randomized model selection.

- We migrated the code base from TF 1 to TF 2, which resulted in a significant speedup and better compatibility.

- Other minor tweaks to improve protection and minimize image perturbations.

- Download the Fawkes Software:

  - (new) Fawkes.dmg for Mac (v1.0): DMG file with installer app. Compatibility: macOS 10.13, 10.14, 10.15, 11.0

  - (new) Fawkes.exe for Windows (v1.0): EXE file. Compatibility: Windows 10

Setup Instructions: For macOS, download the .dmg file and double-click to install. If your Mac refuses to open the app because it is from an unidentified developer, go to System Preferences > Security & Privacy > General and click Open Anyway.

- Download the Fawkes Executable Binary:

The Fawkes binary offers additional options for selecting different parameters. Check here for more information on how to select the best parameters for your use case.

Download Mac Binary (v1.0)

Download Windows Binary (v1.0)

Download Linux Binary (v1.0)

For the binary, simply run `./protection -d imgs/`.

- Fawkes Source Code on GitHub, for development.
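The binary command above operates on a single directory. If your photos are spread across several folders, a small wrapper can gather them first. This is a hypothetical helper, not part of the Fawkes release: it assumes the downloaded binary is saved as `protection` in the current directory and that `.jpg`, `.jpeg`, and `.png` files are the ones you want cloaked, and it passes only the `-d` option shown above.

```python
import shutil
import subprocess
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}  # assumption: the formats you want to cloak


def stage_images(sources: list[str], work_dir: str) -> list[Path]:
    """Copy image files from the given folders into work_dir and return the copies.
    Files with the same name in different folders overwrite each other."""
    dest = Path(work_dir)
    dest.mkdir(parents=True, exist_ok=True)
    copied = []
    for folder in sources:
        for path in sorted(Path(folder).iterdir()):
            if path.is_file() and path.suffix.lower() in IMAGE_SUFFIXES:
                target = dest / path.name
                shutil.copy2(path, target)
                copied.append(target)
    return copied


def protection_command(work_dir: str, binary: str = "./protection") -> list[str]:
    """Equivalent of running './protection -d imgs/' on work_dir."""
    return [binary, "-d", work_dir]


def run_fawkes(sources: list[str], work_dir: str = "imgs") -> int:
    """Stage images, then run the Fawkes binary; return its exit code."""
    if not stage_images(sources, work_dir):
        raise FileNotFoundError("no images found to cloak")
    return subprocess.run(protection_command(work_dir)).returncode
```

Staging copies rather than moving files, so your originals stay where they were.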

If you have any issues running Fawkes, please feel free to ask us by email or by raising an issue in our GitHub repo. Check back often for new releases, or subscribe to our (very) low-volume mailing list for Fawkes announcements and news.

Media and Press Coverage

We stopped trying to keep this list up to date. If you see an interesting article about Fawkes that's not listed here (especially if it is not in English), email us to let us know. Thanks.

- The Verge, James Vincent: Cloak your photos with this AI privacy tool to fool facial recognition

- New York Times, Kashmir Hill: This Tool Could Protect Your Photos From Facial Recognition

- UChicago CS, UChicago CS Researchers Create New Protection Against Facial Recognition, with more commentary and responses to the Clearview.ai CEO's comments in the NY Times article.

- Tech by Vice, Researchers Want to Protect Your Selfies From Facial Recognition

- Die Zeit (Germany), Christoph Drosser: Die unsichtbare Maske (The Invisible Mask)

- TechSpot, Adrian Potoroaca: University of Chicago researchers are building a tool to protect your pictures from facial recognition systems

- The Register (UK), Sick of AI engines scraping your pics for facial recognition? Here's a way to Fawkes them right up

- Gizmodo, Shoshana Wodinsky: This Algorithm Might Make Facial Recognition Obsolete

- South China Morning Post (Hong Kong), Xinmei Shen: Anti-facial recognition tool Fawkes changes your photos just enough to stump Microsoft and Amazon

- ZDNet, Fawkes protects your identity from facial recognition systems, pixel by pixel

- Schneier on Security, Fawkes: Digital Image Cloaking

- SlashGear, Brittany A. Roston: Fawkes photo tool lets anyone secretly 'poison' facial recognition systems

- Digital Photography Review, Brittany Hillen: Researchers release free AI-powered Fawkes image privacy tool for 'cloaking' faces

- MIT Technology Review China, article

- OneZero on Medium, This Filter Makes Your Photos Invisible to Facial Recognition

- Built in Chicago, Nona Tepper: UChicago Researchers Made a Photo-Editing Tool That Hides Your Identity From Facial Recognition Algorithms

- Digital Village, Episode 22: Cloaking Your Photos with Fawkes - Language Models

- The American Genius, Desmond Meagley: The newest booming business: Hiding from facial recognition

- Futurity, 'Fawkes' tool protects you from facial recognition online

- Communications of the ACM, Paul Marks: Blocking Facial Recognition

- Daily Mail (UK), Stacy Liberatore: AI program named after V For Vendetta masks could help protect your photos...

- Today Online (Singapore), This tool could protect your photos from facial recognition

- El Universal (Mexico), Esta herramienta protege tus fotos del reconocimiento facial (This tool protects your photos from facial recognition)

- Radio Canada, This tool can keep your photos safe from facial recognition algorithms

- DigiArena.CZ (Czech), Adam Kos: Do you care about your privacy? So try this software. It also bypasses face recognition.

- Sina Tech (China), Put this 'cloak' on your photos to foil state of the art facial recognition systems

- Instalki.pl (Poland), Ten algorytm pozwoli Ci uchronić się przed systemami rozpoznawania twarzy (This algorithm will let you protect yourself from facial recognition systems)

- Link Estadão (Brazil), Esta ferramenta tenta proteger suas fotos do reconhecimento facial (This tool tries to protect your photos from facial recognition)

- Manual do Usuário (Brazil), Rodrigo Ghedin: O algoritmo anti-reconhecimento facial (The anti-facial-recognition algorithm)

- Analytics India, Sejuti Das: Can This AI Filter Protect Human Identities From Facial Recognition System?

- News18 (India), Shouvik Das: Privacy vs Facial Recognition: Fawkes Aims to Help Protect Your Public Photos From Misuse

- WION News (India), Engineers develop tool called 'Fawkes' to protect online photos from facial recognition

- Pttl.gr (Greece), Fawkes: Special free software that 'secures' your photos against face recognition models!

- IGuru (Greece), Fawkes: protect your photos from face recognition

- Golem.de (Germany), Fawkes soll vor Gesichtserkennung schützen (Fawkes is meant to protect against facial recognition)

- Heise Online (Germany), Verzerrungs-Algorithmus Fawkes will Gesichtserkennung verhindern (Distortion algorithm Fawkes aims to prevent facial recognition)

- We Demain, Morgane Russeil-Salvan: Reconnaissance faciale : voici comment y échapper sur les réseaux sociaux (Facial recognition: here is how to escape it on social networks)

- Developpez.com (France), Des chercheurs mettent au point Fawkes, un « … » numérique (Researchers develop Fawkes, a digital « … »)

- Asia News Day, Computer engineers develop tool that hopes to protect one's online photos from facial recognition

- BundleHaber (Turkey), The Application That Prevents Face Recognition Systems from Recognizing Photos: Fawkes

- TechPadi (Africa), Jide Taiwo: Fawkes Digital Image Cloaking Software Could Help Beat Facial Recognition Algorithms

- En Segundos (Panama), Esta herramienta podría proteger tus fotografías del reconocimiento facial (This tool could protect your photographs from facial recognition)

- TechNews Taiwan, article

- KnowTechnie, Ste Knight: You can now prevent your online photos from being used by facial recognition systems

- TechXplore, Peter Grad: Image cloaking tool thwarts facial recognition programs

Frequently Asked Questions

- How effective is Fawkes against 3rd party facial recognition models like ClearView.ai?

We have extensive experiments and results in the technical paper (linked above). The short version is that we provide strong protection against unauthorized models: our protection success rates in tests against state-of-the-art facial recognition models from Microsoft Azure, Amazon Rekognition, and Face++ are at or near 100%. The protection level will vary depending on your willingness to tolerate small tweaks to your photos. Please remember that this is first and foremost a research effort, and while we are trying hard to produce something useful for privacy-aware Internet users at large, there are likely issues with configuration and with the usability of the tool itself, and it may not work against all models for all images.

- How could this possibly work against DNNs? Aren't they supposed to be smart?

This is a popular reaction to Fawkes, and quite reasonable. We often hear in the popular press how amazingly powerful DNNs are and the impressive things they can do with large datasets, often detecting patterns where humans cannot. Yet the Achilles' heel of DNNs has been a phenomenon called adversarial examples: small tweaks in inputs that can produce massive differences in how DNNs classify the input. These adversarial examples have been recognized since 2014 (here's one of the first papers on the topic), and numerous defenses have been proposed in the years since (some of them from our lab). It turns out they are extremely difficult to remove, and in a way they are a fundamental consequence of the imperfect training of DNNs. Multiple PhD dissertations have already been written on the subject, but suffice it to say that this property is fundamentally difficult to remove, and many in the research area now accept it as a necessary evil for DNNs.

The underlying techniques used by Fawkes draw directly from the same properties that give rise to adversarial examples. Is it possible that DNNs evolve significantly to eliminate this property? It's certainly possible, but we expect that will require a significant change in how DNNs are architected and built. Until then, Fawkes works precisely because of fundamental weaknesses in how DNNs are designed today.

- Can't you just apply some filter, or compression, or blurring algorithm, or add some noise to the image to destroy image cloaks?

As counterintuitive as this may be, the high-level answer is that no simple tool destroys the perturbations that form image cloaks. To make sense of this, it helps to first understand that Fawkes does not use high-intensity pixels or rely on bright patterns to distort the classification value of the image in the feature space. Instead, a precisely computed combination of many pixels, none of which easily stands out, produces the distortion in the feature space. If you're interested in the details, we encourage you to take a look at the technical paper (also linked above), where we present detailed experimental results showing how robust Fawkes is to things like image compression and distortion/noise injection. The quick takeaway is that as you increase the magnitude of these noisy disruptions to the image, the protection from image cloaking does fall, but more slowly than normal image classification accuracy. Translated: yes, it is possible to add noise and distortions at a high enough level to disrupt image cloaks. But such distortions will hurt normal classification far more and faster. By the time a distortion is large enough to break cloaking, it has already broken normal image classification and made the image useless for facial recognition.

- How is Fawkes different from things like the invisibility cloak projects at UMaryland, led by Tom Goldstein, and other similar efforts?

Fawkes works quite differently from these prior efforts, and we believe it is the first practical tool that the average Internet user can make use of. Prior projects like the invisibility cloak project involve users wearing a specially printed patterned sweater, which then prevents the wearer from being recognized by person-detection models. In other cases, the user is asked to wear a printed placard, or a special patterned hat. One fundamental difference is that these approaches can only protect a user when the user is wearing the sweater/hat/placard. Even if users were comfortable wearing these unusual objects in their daily lives, these mechanisms are model-specific, that is, they are specially encoded to prevent detection against a single specific model (in most cases, it is the YOLO model). Someone trying to track you can either use a different model (there are many), or just target users in settings where they can't wear these conspicuous accessories. In contrast, Fawkes is different because it protects users by targeting the model itself. Once you disrupt the model that's trying to track you, the protection is always on no matter where you go or what you wear, and even extends to attempts to identify you from static photos of you taken, shared or sent digitally.

- How can Fawkes be useful when there are so many uncloaked, original images of me on social media that I can't take down?

Fawkes works by training the unauthorized model to learn about a cluster of your cloaked images in its 'feature space.' If you, like many of us, already have a significant set of public images online, then a model like Clearview.AI has likely already downloaded those images and used them to learn 'what you look like' as a cluster in its feature space. However, these models are always adding more training data in order to improve their accuracy and keep up with changes in your looks over time. The more cloaked images you 'release,' the larger the cluster of 'cloaked features' the model will learn. At some point, when your cluster of cloaked images grows bigger than the cluster of uncloaked images, the tracker's model will switch its definition of you to the new cloaked cluster and abandon the original images as outliers.

- Is Fawkes specifically designed as a response to Clearview.ai?

It might surprise some to learn that we started the Fawkes project a while before the New York Times article that profiled Clearview.ai in February 2020. Our original goal was to serve as a preventative measure for Internet users to inoculate themselves against the possibility of some third-party, unauthorized model. Imagine our surprise when we learned 3 months into our project that such companies already existed, and had already built up a powerful model trained from massive troves of online photos. It is our belief that Clearview.ai is likely only the (rather large) tip of the iceberg. Fawkes is designed to significantly raise the costs of building and maintaining accurate models for large-scale facial recognition. If we can reduce the accuracy of these models to make them untrustworthy, or force the model's owners to pay significant per-person costs to maintain accuracy, then we will have largely succeeded. For example, someone carefully examining a large set of photos of a single user might be able to detect that some of them are cloaked. However, that same person is quite possibly capable of identifying the target person in equal or less time using traditional means (without the facial recognition model).

- Can Fawkes be used to impersonate someone else?

The goal of Fawkes is to avoid identification by someone with access to an unauthorized facial recognition model. While it is possible for Fawkes to make you 'look' like someone else (e.g. 'person X') in the eyes of a recognition model, we would not consider it an impersonation attack, since 'person X' is highly likely to want to avoid identification by the model themselves. If you cloaked an image of yourself before giving it as training data to a legitimate model, the model trainer can simply detect the cloak by asking you for a real-time image and testing it against your cloaked images in the feature space. The key to detecting cloaking is the 'ground truth' image of you that a legitimate model can obtain and unauthorized models cannot.

- How can I distinguish photos that have been cloaked from those that have not?

A big part of the goal of Fawkes is to make cloaking as subtle and undetectable as possible and minimize impact on your photos. Thus it is intentionally difficult to tell cloaked images from the originals. We are looking into adding small markers into the cloak as a way to help users identify cloaked photos. More information to come.

- How do I get Fawkes and use it to protect my photos?

We are working hard to produce user-friendly versions of Fawkes for use on Mac and Windows platforms. We have some initial binaries for the major platforms (see above). Fawkes is also available as source code, and you can compile it on your own computer. Feel free to report bugs and issues on GitHub, but please bear with us if you have issues with the usability of these binaries. Note that we do not have any plans to release any Fawkes mobile apps, because Fawkes requires significant computational power that would be challenging for even the most powerful mobile devices.

- We are adding more Q&A soon. If you don't see your question here, please email us and we will add it to the page soon.